Meta-Data data driven testing!

If you are involved in test automation, you know the importance and the challenges of managing data. In my experience, developers are the worst testers, mainly because they fail to understand the concept of data-driven testing. Even good developers who care about testing hard-code test data in their unit test cases. To me, coming up with a good data strategy is more than half of the test automation challenge.

As part of my profession, I teach and train students in SOA lifecycle quality. Over time, I have realized that my basic theory of iteration (refer to my Data Driven Testing (DDT) blog) doesn't really explain all the different types of data that one needs to take into consideration in order to define a good test strategy. It only explains, at a high level, how one can extract randomness and variability from a test and move them into an external data asset.
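To make the basic idea of iteration concrete, here is a minimal sketch in Python. It assumes a hypothetical `login` function standing in for the system under test and an inline CSV string standing in for the external data asset; none of these names come from a real framework. The point is only that the test logic is written once and the variability lives in the rows.

```python
import csv
import io

# Hypothetical external data asset. In practice this would be a CSV file
# kept outside the test script; it is inlined here so the sketch runs as-is.
LOGIN_DATA = """username,password,expected
alice,correct-horse,ok
alice,wrong,denied
,anything,denied
"""


def login(username, password):
    """Hypothetical system under test: accepts exactly one credential pair."""
    if (username, password) == ("alice", "correct-horse"):
        return "ok"
    return "denied"


def run_data_driven(data_text):
    """Run the same test logic once per data row and record pass/fail."""
    results = []
    for row in csv.DictReader(io.StringIO(data_text)):
        actual = login(row["username"], row["password"])
        results.append((row["username"], actual == row["expected"]))
    return results


if __name__ == "__main__":
    for username, passed in run_data_driven(LOGIN_DATA):
        print(f"{username!r}: {'PASS' if passed else 'FAIL'}")
```

Adding a new scenario then means adding a row to the data, not editing the test.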

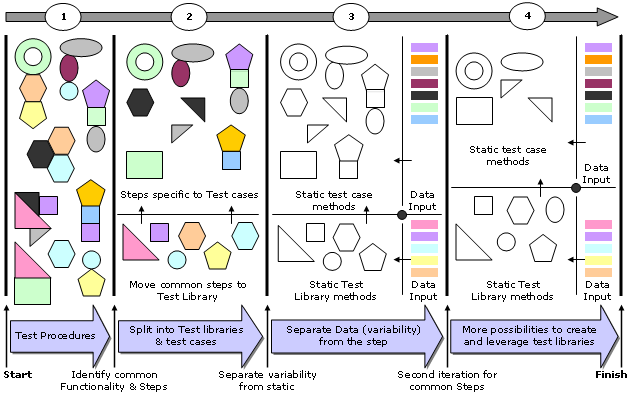

Modular testing is the key to re-usability and lower maintenance. Apart from creating test libraries and using building blocks, it is also important to understand how to organize different types of data outside of a test script.

The following diagram illustrates how different types of data can be organized around a test procedure/logic. Different types of data must not be cobbled together, because doing so almost always leads to increased maintenance costs and chaos.

- Removing Meta-Data from the test logic not only makes the logic more reusable but also lowers its maintenance. A change in meta-data can be handled easily by updating the appropriate properties files. Examples: SQL scripts, tag names, component names. Meta-Data has a 1-1 relationship with the test case.

- Input Test Data is core to the business functionality/scenario. Example: the username and password used to log in to a website. A single test case can have multiple instances of this data, generally referred to as rows inside a dataset.

- Output Test Data is used for validation purposes. Responses can be validated using regular expressions or against the actual data. When generic validations (such as a regular expression that checks the size of the confirmation ID) are not used, output data is mapped 1-1 with the input data.

- Server Environment Data refers to the endpoint data, which varies between environments. The ability to change this data at execution time allows the same set of test cases to be run against multiple environments.

- Client Environment Data refers to the local environment data specific to the test framework and project.

- Staging data allows the same test asset to be staged in different ways.
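The separation described in the list above can be sketched roughly as follows. This is an illustration under stated assumptions, not a prescribed layout: every name, key, query and URL below is hypothetical, each dictionary stands in for what would normally be a separate external file, and staging data is left out because the right shape for it depends on the framework.

```python
# Meta-data: 1-1 with the test case (SQL scripts, tag names, component names).
META_DATA = {
    "login_query": "SELECT id FROM users WHERE name = ?",
    "user_tag": "userName",
}

# Input test data: many rows per test case.
INPUT_ROWS = [
    {"username": "alice", "password": "pw1"},
    {"username": "bob", "password": "pw2"},
]

# Output test data: mapped 1-1 with the input rows, used for validation.
OUTPUT_ROWS = [
    {"status": "ok"},
    {"status": "denied"},
]

# Server environment data: endpoints that vary between environments.
SERVER_ENVS = {
    "dev": {"endpoint": "http://dev.example.test/login"},
    "qa": {"endpoint": "http://qa.example.test/login"},
}

# Client environment data: local settings for the framework and project.
CLIENT_ENV = {"report_dir": "./reports"}


def build_runs(env_name):
    """Combine the separate data sets into one run description per input row.

    The environment is chosen at execution time, so the same rows can be
    executed against any entry in SERVER_ENVS.
    """
    if len(INPUT_ROWS) != len(OUTPUT_ROWS):
        raise ValueError("output data must map 1-1 to input data")
    endpoint = SERVER_ENVS[env_name]["endpoint"]
    return [
        {
            "endpoint": endpoint,
            "meta": META_DATA,
            "input": given,
            "expected": expected,
            "report_dir": CLIENT_ENV["report_dir"],
        }
        for given, expected in zip(INPUT_ROWS, OUTPUT_ROWS)
    ]


if __name__ == "__main__":
    for run in build_runs("qa"):
        print(run["endpoint"], run["input"]["username"], run["expected"]["status"])
```

Because each kind of data lives in its own place, switching from `"dev"` to `"qa"` or renaming a tag touches one data set and leaves the test logic alone.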