Data Driven Testing (DDT)

We all have heard about data driven testing and how it can improve our ROI exponentially and at the same time reduce maintenance costs. We all believe in it, but we still don't practice it to the extent we should. This blog describes the concepts using a pictorial notation in an effort to bringforth the structural thinking that one needs to implement DDT.

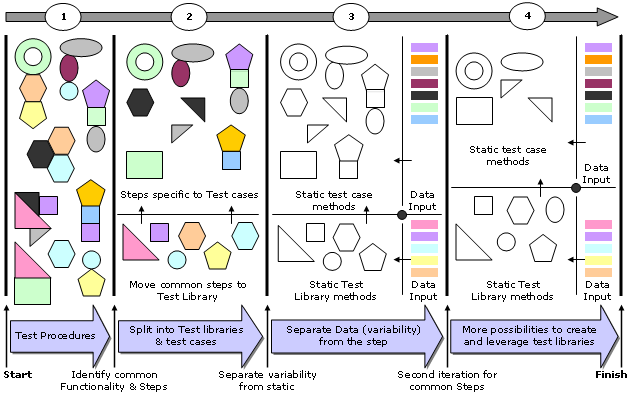

A picture is worth thousand words:-

Step 1:

You start your automation activity with some test procedures in hand. Refer to column 1. A test step is reflected by a donut, hexagon, triangle, etc. These different building blocks combine togather to form a structure, which depicts a test case. Different shapes and their combinations reflect various test cases in the test suite. As you can see, a test case can have many different steps as part of its procedure.

Step 2:

In next step, you start automating one test case at a time. When you encounter a second test case which contains the similar step that you automated before, you move that particular step into what we call "Test Library". You must filter out all common functionality into test libraries over time. Refer to column 2. It shows how you can take common shapes and make library out of them.

Step 3:

As part of Step 3, you separate variability from static functionality. This is the most important step in DDT. Most of the times the variability is the data input; but you may have more variability in your system that you may also want to identify and manage it as data. More the static functionality you can identify, more you'll be able to leverage your automated scripts across datasets. Refer to column 3. All colors depict data. In this step you also make your test libraries data driven.

Step 4:

Once you separate the variability from the core (static) functionality, you'll be able to identify opportunities for more test libraries or leverage existing libraries. Go through this iteration to make sure that you are leveraging your test case libraries to the fullest. Convert more common functionalities into libraries, if required. Refer to column 4.

The more you will think in terms of DDT and leveraging libraries, the easier it will get to automate more in less time. Apart from achieving higher productivity, you will realize that your automated test cases are a lot easier to maintain. Whenever some common functionality changes, you just need to update the corresponding test library. Just imagine updating hundreds of test cases, in case you are not using libraries. Similarly, when some data requirements changes, you will not have to change your automated scripts - just update your datasets and you will be done.

Final Thought:

Tools only provide mechanisms to accomplish DDT or create test libraries, but if we choose not to practice these concepts, we end up creating large autmation code which is both hard to maintain and sustain.

3 comments:

Rajeev that is a great explnation of DDT. The diagrams are self explainatory.

http://www.sqaforums.com/showflat.php?Cat=0&Number=351566&page=0&vc=1&PHPSESSID=#Post351566

we should chat rajeev....

sorry...i'm 'thekid'

Post a Comment